databricks.MwsWorkspaces


Example Usage

Creating a Databricks on AWS workspace


To get a workspace running, you have to configure the following resources:

- databricks.MwsCredentials - You can share a credentials (cross-account IAM role) configuration ID with multiple workspaces. It is not required to create a new one for each workspace.

- databricks.MwsStorageConfigurations - You can share a root S3 bucket with multiple workspaces in a single account. You do not have to create new ones for each workspace. If you share a root S3 bucket for multiple workspaces in an account, data on the root S3 bucket is partitioned into separate directories by workspace.

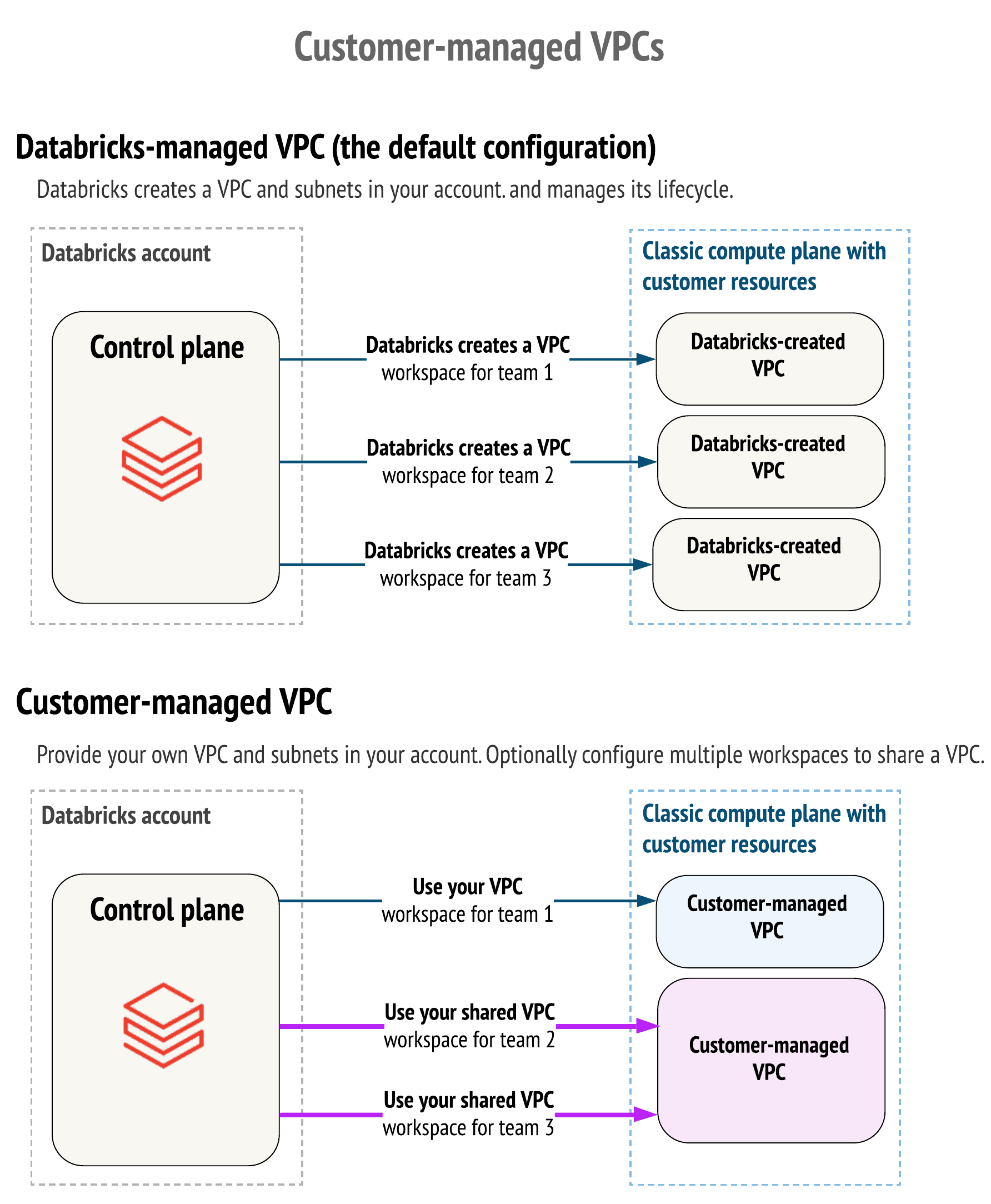

- databricks.MwsNetworks - (optional, but recommended) You can share one customer-managed VPC with multiple workspaces in a single account. You do not have to create a new VPC for each workspace. However, you cannot reuse subnets or security groups with other resources, including other workspaces or non-Databricks resources. If you plan to share one VPC with multiple workspaces, be sure to size your VPC and subnets accordingly. Because a databricks.MwsNetworks resource encapsulates the subnets and security groups, you cannot reuse the same databricks.MwsNetworks across workspaces.

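- databricks.MwsCustomerManagedKeys - You can share a customer-managed key across workspaces.

The Pulumi programs below assume that prefix, crossaccountArn, rootBucket, vpcId, subnetsPrivate, securityGroup, and region are defined elsewhere in your program (the names follow each language's naming convention).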
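The sharing rules above can be checked before deployment. The following is a minimal sketch in plain Python (no Pulumi calls), assuming a hypothetical WorkspacePlan record and a hypothetical find_reused_network_resources helper; neither is part of the Databricks provider. It flags subnets or security groups that appear in more than one planned workspace, while allowing the credentials, storage configuration, and VPC to be shared. It does not detect reuse by non-Databricks resources.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class WorkspacePlan:
    """Hypothetical description of one planned workspace (not a provider type)."""
    name: str
    credentials_id: str            # may be shared across workspaces
    storage_configuration_id: str  # may be shared across workspaces
    vpc_id: str                    # may be shared across workspaces
    subnet_ids: list = field(default_factory=list)          # must not be reused
    security_group_ids: list = field(default_factory=list)  # must not be reused


def find_reused_network_resources(plans):
    """Return {resource_id: [workspace names]} for subnets or security groups
    that are referenced by more than one workspace plan."""
    users = defaultdict(list)
    for plan in plans:
        # A set avoids counting the same ID twice within a single plan.
        for resource_id in set(plan.subnet_ids) | set(plan.security_group_ids):
            users[resource_id].append(plan.name)
    return {rid: names for rid, names in users.items() if len(names) > 1}


# Illustrative values only: both workspaces share credentials, storage and VPC,
# which is allowed, but they also share the security group "sg-1", which is not.
plans = [
    WorkspacePlan("dev", "creds-1", "storage-1", "vpc-1", ["subnet-a", "subnet-b"], ["sg-1"]),
    WorkspacePlan("prod", "creds-1", "storage-1", "vpc-1", ["subnet-c", "subnet-d"], ["sg-1"]),
]
conflicts = find_reused_network_resources(plans)
print(conflicts)
```

An empty result means that no subnet or security group is referenced by two planned workspaces.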

import * as pulumi from "@pulumi/pulumi";

import * as databricks from "@pulumi/databricks";

const config = new pulumi.Config();

// Account ID that can be found in the dropdown under the email address in the upper-right corner of https://accounts.cloud.databricks.com/

const databricksAccountId = config.requireObject("databricksAccountId");

// register cross-account ARN

const _this = new databricks.MwsCredentials("this", {

accountId: databricksAccountId,

credentialsName: `${prefix}-creds`,

roleArn: crossaccountArn,

});

// register root bucket

const thisMwsStorageConfigurations = new databricks.MwsStorageConfigurations("this", {

accountId: databricksAccountId,

storageConfigurationName: `${prefix}-storage`,

bucketName: rootBucket,

});

// register VPC

const thisMwsNetworks = new databricks.MwsNetworks("this", {

accountId: databricksAccountId,

networkName: `${prefix}-network`,

vpcId: vpcId,

subnetIds: subnetsPrivate,

securityGroupIds: [securityGroup],

});

// create workspace in given VPC with DBFS on root bucket

const thisMwsWorkspaces = new databricks.MwsWorkspaces("this", {

accountId: databricksAccountId,

workspaceName: prefix,

awsRegion: region,

credentialsId: _this.credentialsId,

storageConfigurationId: thisMwsStorageConfigurations.storageConfigurationId,

networkId: thisMwsNetworks.networkId,

token: {},

});

export const databricksToken = thisMwsWorkspaces.token.apply(token => token?.tokenValue);

import pulumi

import pulumi_databricks as databricks

config = pulumi.Config()

# Account ID that can be found in the dropdown under the email address in the upper-right corner of https://accounts.cloud.databricks.com/

databricks_account_id = config.require_object("databricksAccountId")

# register cross-account ARN

this = databricks.MwsCredentials("this",

account_id=databricks_account_id,

credentials_name=f"{prefix}-creds",

role_arn=crossaccount_arn)

# register root bucket

this_mws_storage_configurations = databricks.MwsStorageConfigurations("this",

account_id=databricks_account_id,

storage_configuration_name=f"{prefix}-storage",

bucket_name=root_bucket)

# register VPC

this_mws_networks = databricks.MwsNetworks("this",

account_id=databricks_account_id,

network_name=f"{prefix}-network",

vpc_id=vpc_id,

subnet_ids=subnets_private,

security_group_ids=[security_group])

# create workspace in given VPC with DBFS on root bucket

this_mws_workspaces = databricks.MwsWorkspaces("this",

account_id=databricks_account_id,

workspace_name=prefix,

aws_region=region,

credentials_id=this.credentials_id,

storage_configuration_id=this_mws_storage_configurations.storage_configuration_id,

network_id=this_mws_networks.network_id,

token=databricks.MwsWorkspacesTokenArgs())

pulumi.export("databricksToken", this_mws_workspaces.token.token_value)

package main

import (

"fmt"

"github.com/pulumi/pulumi-databricks/sdk/go/databricks"

"github.com/pulumi/pulumi/sdk/v3/go/pulumi"

"github.com/pulumi/pulumi/sdk/v3/go/pulumi/config"

)

func main() {

pulumi.Run(func(ctx *pulumi.Context) error {

cfg := config.New(ctx, "")

// Account ID that can be found in the dropdown under the email address in the upper-right corner of https://accounts.cloud.databricks.com/

databricksAccountId := cfg.RequireObject("databricksAccountId")

// register cross-account ARN

this, err := databricks.NewMwsCredentials(ctx, "this", &databricks.MwsCredentialsArgs{

AccountId: pulumi.Any(databricksAccountId),

CredentialsName: pulumi.String(fmt.Sprintf("%v-creds", prefix)),

RoleArn: pulumi.Any(crossaccountArn),

})

if err != nil {

return err

}

// register root bucket

thisMwsStorageConfigurations, err := databricks.NewMwsStorageConfigurations(ctx, "this", &databricks.MwsStorageConfigurationsArgs{

AccountId: pulumi.Any(databricksAccountId),

StorageConfigurationName: pulumi.String(fmt.Sprintf("%v-storage", prefix)),

BucketName: pulumi.Any(rootBucket),

})

if err != nil {

return err

}

// register VPC

thisMwsNetworks, err := databricks.NewMwsNetworks(ctx, "this", &databricks.MwsNetworksArgs{

AccountId: pulumi.Any(databricksAccountId),

NetworkName: pulumi.String(fmt.Sprintf("%v-network", prefix)),

VpcId: pulumi.Any(vpcId),

SubnetIds: pulumi.Any(subnetsPrivate),

SecurityGroupIds: pulumi.StringArray{

securityGroup,

},

})

if err != nil {

return err

}

// create workspace in given VPC with DBFS on root bucket

thisMwsWorkspaces, err := databricks.NewMwsWorkspaces(ctx, "this", &databricks.MwsWorkspacesArgs{

AccountId: pulumi.Any(databricksAccountId),

WorkspaceName: pulumi.Any(prefix),

AwsRegion: pulumi.Any(region),

CredentialsId: this.CredentialsId,

StorageConfigurationId: thisMwsStorageConfigurations.StorageConfigurationId,

NetworkId: thisMwsNetworks.NetworkId,

Token: &databricks.MwsWorkspacesTokenArgs{},

})

if err != nil {

return err

}

ctx.Export("databricksToken", thisMwsWorkspaces.Token.ApplyT(func(token databricks.MwsWorkspacesToken) (*string, error) {

return &token.TokenValue, nil

}).(pulumi.StringPtrOutput))

return nil

})

}

using System.Collections.Generic;

using System.Linq;

using Pulumi;

using Databricks = Pulumi.Databricks;

return await Deployment.RunAsync(() =>

{

var config = new Config();

// Account ID that can be found in the dropdown under the email address in the upper-right corner of https://accounts.cloud.databricks.com/

var databricksAccountId = config.RequireObject<dynamic>("databricksAccountId");

// register cross-account ARN

var @this = new Databricks.MwsCredentials("this", new()

{

AccountId = databricksAccountId,

CredentialsName = $"{prefix}-creds",

RoleArn = crossaccountArn,

});

// register root bucket

var thisMwsStorageConfigurations = new Databricks.MwsStorageConfigurations("this", new()

{

AccountId = databricksAccountId,

StorageConfigurationName = $"{prefix}-storage",

BucketName = rootBucket,

});

// register VPC

var thisMwsNetworks = new Databricks.MwsNetworks("this", new()

{

AccountId = databricksAccountId,

NetworkName = $"{prefix}-network",

VpcId = vpcId,

SubnetIds = subnetsPrivate,

SecurityGroupIds = new[]

{

securityGroup,

},

});

// create workspace in given VPC with DBFS on root bucket

var thisMwsWorkspaces = new Databricks.MwsWorkspaces("this", new()

{

AccountId = databricksAccountId,

WorkspaceName = prefix,

AwsRegion = region,

CredentialsId = @this.CredentialsId,

StorageConfigurationId = thisMwsStorageConfigurations.StorageConfigurationId,

NetworkId = thisMwsNetworks.NetworkId,

Token = new Databricks.Inputs.MwsWorkspacesTokenArgs(),

});

return new Dictionary<string, object?>

{

["databricksToken"] = thisMwsWorkspaces.Token.Apply(token => token?.TokenValue),

};

});

package generated_program;

import com.pulumi.Context;

import com.pulumi.Pulumi;

import com.pulumi.core.Output;

import com.pulumi.databricks.MwsCredentials;

import com.pulumi.databricks.MwsCredentialsArgs;

import com.pulumi.databricks.MwsStorageConfigurations;

import com.pulumi.databricks.MwsStorageConfigurationsArgs;

import com.pulumi.databricks.MwsNetworks;

import com.pulumi.databricks.MwsNetworksArgs;

import com.pulumi.databricks.MwsWorkspaces;

import com.pulumi.databricks.MwsWorkspacesArgs;

import com.pulumi.databricks.inputs.MwsWorkspacesTokenArgs;

import java.util.List;

import java.util.ArrayList;

import java.util.Map;

import java.io.File;

import java.nio.file.Files;

import java.nio.file.Paths;

public class App {

public static void main(String[] args) {

Pulumi.run(App::stack);

}

public static void stack(Context ctx) {

final var config = ctx.config();

final var databricksAccountId = config.get("databricksAccountId");

// register cross-account ARN

var this_ = new MwsCredentials("this", MwsCredentialsArgs.builder()

.accountId(databricksAccountId)

.credentialsName(String.format("%s-creds", prefix))

.roleArn(crossaccountArn)

.build());

// register root bucket

var thisMwsStorageConfigurations = new MwsStorageConfigurations("thisMwsStorageConfigurations", MwsStorageConfigurationsArgs.builder()

.accountId(databricksAccountId)

.storageConfigurationName(String.format("%s-storage", prefix))

.bucketName(rootBucket)

.build());

// register VPC

var thisMwsNetworks = new MwsNetworks("thisMwsNetworks", MwsNetworksArgs.builder()

.accountId(databricksAccountId)

.networkName(String.format("%s-network", prefix))

.vpcId(vpcId)

.subnetIds(subnetsPrivate)

.securityGroupIds(securityGroup)

.build());

// create workspace in given VPC with DBFS on root bucket

var thisMwsWorkspaces = new MwsWorkspaces("thisMwsWorkspaces", MwsWorkspacesArgs.builder()

.accountId(databricksAccountId)

.workspaceName(prefix)

.awsRegion(region)

.credentialsId(this_.credentialsId())

.storageConfigurationId(thisMwsStorageConfigurations.storageConfigurationId())

.networkId(thisMwsNetworks.networkId())

.token(MwsWorkspacesTokenArgs.builder().build())

.build());

ctx.export("databricksToken", thisMwsWorkspaces.token().applyValue(token -> token.tokenValue()));

}

}

configuration:

databricksAccountId:

type: dynamic

resources:

# register cross-account ARN

this:

type: databricks:MwsCredentials

properties:

accountId: ${databricksAccountId}

credentialsName: ${prefix}-creds

roleArn: ${crossaccountArn}

# register root bucket

thisMwsStorageConfigurations:

type: databricks:MwsStorageConfigurations

name: this

properties:

accountId: ${databricksAccountId}

storageConfigurationName: ${prefix}-storage

bucketName: ${rootBucket}

# register VPC

thisMwsNetworks:

type: databricks:MwsNetworks

name: this

properties:

accountId: ${databricksAccountId}

networkName: ${prefix}-network

vpcId: ${vpcId}

subnetIds: ${subnetsPrivate}

securityGroupIds:

- ${securityGroup}

# create workspace in given VPC with DBFS on root bucket

thisMwsWorkspaces:

type: databricks:MwsWorkspaces

name: this

properties:

accountId: ${databricksAccountId}

workspaceName: ${prefix}

awsRegion: ${region}

credentialsId: ${this.credentialsId}

storageConfigurationId: ${thisMwsStorageConfigurations.storageConfigurationId}

networkId: ${thisMwsNetworks.networkId}

token: {}

outputs:

databricksToken: ${thisMwsWorkspaces.token.tokenValue}

Creating a Databricks on AWS workspace with Databricks-Managed VPC

By default, Databricks creates a VPC in your AWS account for each workspace and uses it to run the clusters in that workspace. Optionally, you can use your own VPC for the workspace through the customer-managed VPC feature. Databricks recommends that you provide your own VPC through databricks.MwsNetworks so that you can configure it according to your organization’s enterprise cloud standards while still conforming to Databricks requirements. You cannot migrate an existing workspace to your own VPC. The differences between the two modes, expressed as IAM policy actions, are described on this page.
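In terms of workspace arguments, the two modes differ in whether a network ID is supplied: the customer-managed VPC example on this page passes one, and the Databricks-managed VPC example does not. The following is a minimal sketch in plain Python, assuming a hypothetical workspace_args helper (not a provider function) that builds the keyword arguments for databricks.MwsWorkspaces. All values are illustrative.

```python
from typing import Optional


def workspace_args(account_id: str, name: str, region: str, credentials_id: str,
                   storage_configuration_id: str,
                   network_id: Optional[str] = None) -> dict:
    """Hypothetical helper: build keyword arguments for a workspace.

    When network_id is None the key is omitted, which corresponds to the
    Databricks-managed VPC case.
    """
    args = {
        "account_id": account_id,
        "workspace_name": name,
        "aws_region": region,
        "credentials_id": credentials_id,
        "storage_configuration_id": storage_configuration_id,
    }
    if network_id is not None:
        # Customer-managed VPC: reference the registered network configuration.
        args["network_id"] = network_id
    return args


# Databricks-managed VPC: no network ID is passed.
managed = workspace_args("acct", "demo", "us-east-1", "creds-1", "storage-1")
# Customer-managed VPC: the network ID of a databricks.MwsNetworks resource is passed.
customer = workspace_args("acct", "demo", "us-east-1", "creds-1", "storage-1",
                          network_id="net-1")
```

In a Pulumi Python program the resulting dictionary could be unpacked into the resource constructor, for example databricks.MwsWorkspaces("this", **managed). Because an existing workspace cannot be migrated to your own VPC, this choice has to be made when the workspace is created.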

import * as pulumi from "@pulumi/pulumi";

import * as aws from "@pulumi/aws";

import * as databricks from "@pulumi/databricks";

import * as random from "@pulumi/random";

const config = new pulumi.Config();

// Account ID, which can be found in the top-right corner of https://accounts.cloud.databricks.com/

const databricksAccountId = config.requireObject("databricksAccountId");

const naming = new random.RandomString("naming", {

special: false,

upper: false,

length: 6,

});

const prefix = `dltp${naming.result}`;

const _this = databricks.getAwsAssumeRolePolicy({

externalId: databricksAccountId,

});

const crossAccountRole = new aws.iam.Role("cross_account_role", {

name: `${prefix}-crossaccount`,

assumeRolePolicy: _this.then(_this => _this.json),

tags: tags,

});

const thisGetAwsCrossAccountPolicy = databricks.getAwsCrossAccountPolicy({});

const thisRolePolicy = new aws.iam.RolePolicy("this", {

name: `${prefix}-policy`,

role: crossAccountRole.id,

policy: thisGetAwsCrossAccountPolicy.then(thisGetAwsCrossAccountPolicy => thisGetAwsCrossAccountPolicy.json),

});

const thisMwsCredentials = new databricks.MwsCredentials("this", {

accountId: databricksAccountId,

credentialsName: `${prefix}-creds`,

roleArn: crossAccountRole.arn,

});

const rootStorageBucket = new aws.s3.BucketV2("root_storage_bucket", {

bucket: `${prefix}-rootbucket`,

acl: "private",

forceDestroy: true,

tags: tags,

});

const rootVersioning = new aws.s3.BucketVersioningV2("root_versioning", {

bucket: rootStorageBucket.id,

versioningConfiguration: {

status: "Disabled",

},

});

const rootStorageBucketBucketServerSideEncryptionConfigurationV2 = new aws.s3.BucketServerSideEncryptionConfigurationV2("root_storage_bucket", {

bucket: rootStorageBucket.bucket,

rules: [{

applyServerSideEncryptionByDefault: {

sseAlgorithm: "AES256",

},

}],

});

const rootStorageBucketBucketPublicAccessBlock = new aws.s3.BucketPublicAccessBlock("root_storage_bucket", {

bucket: rootStorageBucket.id,

blockPublicAcls: true,

blockPublicPolicy: true,

ignorePublicAcls: true,

restrictPublicBuckets: true,

}, {

dependsOn: [rootStorageBucket],

});

const thisGetAwsBucketPolicy = databricks.getAwsBucketPolicyOutput({

bucket: rootStorageBucket.bucket,

});

const rootBucketPolicy = new aws.s3.BucketPolicy("root_bucket_policy", {

bucket: rootStorageBucket.id,

policy: thisGetAwsBucketPolicy.apply(thisGetAwsBucketPolicy => thisGetAwsBucketPolicy.json),

}, {

dependsOn: [rootStorageBucketBucketPublicAccessBlock],

});

const thisMwsStorageConfigurations = new databricks.MwsStorageConfigurations("this", {

accountId: databricksAccountId,

storageConfigurationName: `${prefix}-storage`,

bucketName: rootStorageBucket.bucket,

});

const thisMwsWorkspaces = new databricks.MwsWorkspaces("this", {

accountId: databricksAccountId,

workspaceName: prefix,

awsRegion: "us-east-1",

credentialsId: thisMwsCredentials.credentialsId,

storageConfigurationId: thisMwsStorageConfigurations.storageConfigurationId,

token: {},

customTags: {

SoldToCode: "1234",

},

});

export const databricksToken = thisMwsWorkspaces.token.apply(token => token?.tokenValue);

import pulumi

import pulumi_aws as aws

import pulumi_databricks as databricks

import pulumi_random as random

config = pulumi.Config()

# Account ID, which can be found in the top-right corner of https://accounts.cloud.databricks.com/

databricks_account_id = config.require_object("databricksAccountId")

naming = random.RandomString("naming",

special=False,

upper=False,

length=6)

prefix = f"dltp{naming['result']}"

this = databricks.get_aws_assume_role_policy(external_id=databricks_account_id)

cross_account_role = aws.iam.Role("cross_account_role",

name=f"{prefix}-crossaccount",

assume_role_policy=this.json,

tags=tags)

this_get_aws_cross_account_policy = databricks.get_aws_cross_account_policy()

this_role_policy = aws.iam.RolePolicy("this",

name=f"{prefix}-policy",

role=cross_account_role.id,

policy=this_get_aws_cross_account_policy.json)

this_mws_credentials = databricks.MwsCredentials("this",

account_id=databricks_account_id,

credentials_name=f"{prefix}-creds",

role_arn=cross_account_role.arn)

root_storage_bucket = aws.s3.BucketV2("root_storage_bucket",

bucket=f"{prefix}-rootbucket",

acl="private",

force_destroy=True,

tags=tags)

root_versioning = aws.s3.BucketVersioningV2("root_versioning",

bucket=root_storage_bucket.id,

versioning_configuration=aws.s3.BucketVersioningV2VersioningConfigurationArgs(

status="Disabled",

))

root_storage_bucket_bucket_server_side_encryption_configuration_v2 = aws.s3.BucketServerSideEncryptionConfigurationV2("root_storage_bucket",

bucket=root_storage_bucket.bucket,

rules=[aws.s3.BucketServerSideEncryptionConfigurationV2RuleArgs(

apply_server_side_encryption_by_default=aws.s3.BucketServerSideEncryptionConfigurationV2RuleApplyServerSideEncryptionByDefaultArgs(

sse_algorithm="AES256",

),

)])

root_storage_bucket_bucket_public_access_block = aws.s3.BucketPublicAccessBlock("root_storage_bucket",

bucket=root_storage_bucket.id,

block_public_acls=True,

block_public_policy=True,

ignore_public_acls=True,

restrict_public_buckets=True,

opts = pulumi.ResourceOptions(depends_on=[root_storage_bucket]))

this_get_aws_bucket_policy = databricks.get_aws_bucket_policy_output(bucket=root_storage_bucket.bucket)

root_bucket_policy = aws.s3.BucketPolicy("root_bucket_policy",

bucket=root_storage_bucket.id,

policy=this_get_aws_bucket_policy.json,

opts = pulumi.ResourceOptions(depends_on=[root_storage_bucket_bucket_public_access_block]))

this_mws_storage_configurations = databricks.MwsStorageConfigurations("this",

account_id=databricks_account_id,

storage_configuration_name=f"{prefix}-storage",

bucket_name=root_storage_bucket.bucket)

this_mws_workspaces = databricks.MwsWorkspaces("this",

account_id=databricks_account_id,

workspace_name=prefix,

aws_region="us-east-1",

credentials_id=this_mws_credentials.credentials_id,

storage_configuration_id=this_mws_storage_configurations.storage_configuration_id,

token=databricks.MwsWorkspacesTokenArgs(),

custom_tags={

"SoldToCode": "1234",

})

pulumi.export("databricksToken", this_mws_workspaces.token.token_value)

package main

import (

"fmt"

"github.com/pulumi/pulumi-aws/sdk/v6/go/aws/iam"

"github.com/pulumi/pulumi-aws/sdk/v6/go/aws/s3"

"github.com/pulumi/pulumi-databricks/sdk/go/databricks"

"github.com/pulumi/pulumi-random/sdk/v4/go/random"

"github.com/pulumi/pulumi/sdk/v3/go/pulumi"

"github.com/pulumi/pulumi/sdk/v3/go/pulumi/config"

)

func main() {

pulumi.Run(func(ctx *pulumi.Context) error {

cfg := config.New(ctx, "")

// Account ID, which can be found in the top-right corner of https://accounts.cloud.databricks.com/

databricksAccountId := cfg.RequireObject("databricksAccountId")

naming, err := random.NewRandomString(ctx, "naming", &random.RandomStringArgs{

Special: pulumi.Bool(false),

Upper: pulumi.Bool(false),

Length: pulumi.Int(6),

})

if err != nil {

return err

}

prefix := fmt.Sprintf("dltp%v", naming.Result)

this, err := databricks.GetAwsAssumeRolePolicy(ctx, &databricks.GetAwsAssumeRolePolicyArgs{

ExternalId: databricksAccountId,

}, nil)

if err != nil {

return err

}

crossAccountRole, err := iam.NewRole(ctx, "cross_account_role", &iam.RoleArgs{

Name: pulumi.String(fmt.Sprintf("%v-crossaccount", prefix)),

AssumeRolePolicy: pulumi.String(this.Json),

Tags: pulumi.Any(tags),

})

if err != nil {

return err

}

thisGetAwsCrossAccountPolicy, err := databricks.GetAwsCrossAccountPolicy(ctx, nil, nil)

if err != nil {

return err

}

_, err = iam.NewRolePolicy(ctx, "this", &iam.RolePolicyArgs{

Name: pulumi.String(fmt.Sprintf("%v-policy", prefix)),

Role: crossAccountRole.ID(),

Policy: pulumi.String(thisGetAwsCrossAccountPolicy.Json),

})

if err != nil {

return err

}

thisMwsCredentials, err := databricks.NewMwsCredentials(ctx, "this", &databricks.MwsCredentialsArgs{

AccountId: pulumi.Any(databricksAccountId),

CredentialsName: pulumi.String(fmt.Sprintf("%v-creds", prefix)),

RoleArn: crossAccountRole.Arn,

})

if err != nil {

return err

}

rootStorageBucket, err := s3.NewBucketV2(ctx, "root_storage_bucket", &s3.BucketV2Args{

Bucket: pulumi.String(fmt.Sprintf("%v-rootbucket", prefix)),

Acl: pulumi.String("private"),

ForceDestroy: pulumi.Bool(true),

Tags: pulumi.Any(tags),

})

if err != nil {

return err

}

_, err = s3.NewBucketVersioningV2(ctx, "root_versioning", &s3.BucketVersioningV2Args{

Bucket: rootStorageBucket.ID(),

VersioningConfiguration: &s3.BucketVersioningV2VersioningConfigurationArgs{

Status: pulumi.String("Disabled"),

},

})

if err != nil {

return err

}

_, err = s3.NewBucketServerSideEncryptionConfigurationV2(ctx, "root_storage_bucket", &s3.BucketServerSideEncryptionConfigurationV2Args{

Bucket: rootStorageBucket.Bucket,

Rules: s3.BucketServerSideEncryptionConfigurationV2RuleArray{

&s3.BucketServerSideEncryptionConfigurationV2RuleArgs{

ApplyServerSideEncryptionByDefault: &s3.BucketServerSideEncryptionConfigurationV2RuleApplyServerSideEncryptionByDefaultArgs{

SseAlgorithm: pulumi.String("AES256"),

},

},

},

})

if err != nil {

return err

}

rootStorageBucketBucketPublicAccessBlock, err := s3.NewBucketPublicAccessBlock(ctx, "root_storage_bucket", &s3.BucketPublicAccessBlockArgs{

Bucket: rootStorageBucket.ID(),

BlockPublicAcls: pulumi.Bool(true),

BlockPublicPolicy: pulumi.Bool(true),

IgnorePublicAcls: pulumi.Bool(true),

RestrictPublicBuckets: pulumi.Bool(true),

}, pulumi.DependsOn([]pulumi.Resource{

rootStorageBucket,

}))

if err != nil {

return err

}

thisGetAwsBucketPolicy := databricks.GetAwsBucketPolicyOutput(ctx, databricks.GetAwsBucketPolicyOutputArgs{

Bucket: rootStorageBucket.Bucket,

}, nil)

_, err = s3.NewBucketPolicy(ctx, "root_bucket_policy", &s3.BucketPolicyArgs{

Bucket: rootStorageBucket.ID(),

Policy: thisGetAwsBucketPolicy.ApplyT(func(thisGetAwsBucketPolicy databricks.GetAwsBucketPolicyResult) (*string, error) {

return &thisGetAwsBucketPolicy.Json, nil

}).(pulumi.StringPtrOutput),

}, pulumi.DependsOn([]pulumi.Resource{

rootStorageBucketBucketPublicAccessBlock,

}))

if err != nil {

return err

}

thisMwsStorageConfigurations, err := databricks.NewMwsStorageConfigurations(ctx, "this", &databricks.MwsStorageConfigurationsArgs{

AccountId: pulumi.Any(databricksAccountId),

StorageConfigurationName: pulumi.String(fmt.Sprintf("%v-storage", prefix)),

BucketName: rootStorageBucket.Bucket,

})

if err != nil {

return err

}

thisMwsWorkspaces, err := databricks.NewMwsWorkspaces(ctx, "this", &databricks.MwsWorkspacesArgs{

AccountId: pulumi.Any(databricksAccountId),

WorkspaceName: pulumi.String(prefix),

AwsRegion: pulumi.String("us-east-1"),

CredentialsId: thisMwsCredentials.CredentialsId,

StorageConfigurationId: thisMwsStorageConfigurations.StorageConfigurationId,

Token: &databricks.MwsWorkspacesTokenArgs{},

CustomTags: pulumi.Map{

"SoldToCode": pulumi.Any("1234"),

},

})

if err != nil {

return err

}

ctx.Export("databricksToken", thisMwsWorkspaces.Token.ApplyT(func(token databricks.MwsWorkspacesToken) (*string, error) {

return &token.TokenValue, nil

}).(pulumi.StringPtrOutput))

return nil

})

}

using System.Collections.Generic;

using System.Linq;

using Pulumi;

using Aws = Pulumi.Aws;

using Databricks = Pulumi.Databricks;

using Random = Pulumi.Random;

return await Deployment.RunAsync(() =>

{

var config = new Config();

// Account ID, which can be found in the top-right corner of https://accounts.cloud.databricks.com/

var databricksAccountId = config.RequireObject<dynamic>("databricksAccountId");

var naming = new Random.RandomString("naming", new()

{

Special = false,

Upper = false,

Length = 6,

});

var prefix = $"dltp{naming.Result}";

var @this = Databricks.GetAwsAssumeRolePolicy.Invoke(new()

{

ExternalId = databricksAccountId,

});

var crossAccountRole = new Aws.Iam.Role("cross_account_role", new()

{

Name = $"{prefix}-crossaccount",

AssumeRolePolicy = @this.Apply(getAwsAssumeRolePolicyResult => getAwsAssumeRolePolicyResult.Json),

Tags = tags,

});

var thisGetAwsCrossAccountPolicy = Databricks.GetAwsCrossAccountPolicy.Invoke();

var thisRolePolicy = new Aws.Iam.RolePolicy("this", new()

{

Name = $"{prefix}-policy",

Role = crossAccountRole.Id,

Policy = thisGetAwsCrossAccountPolicy.Apply(getAwsCrossAccountPolicyResult => getAwsCrossAccountPolicyResult.Json),

});

var thisMwsCredentials = new Databricks.MwsCredentials("this", new()

{

AccountId = databricksAccountId,

CredentialsName = $"{prefix}-creds",

RoleArn = crossAccountRole.Arn,

});

var rootStorageBucket = new Aws.S3.BucketV2("root_storage_bucket", new()

{

Bucket = $"{prefix}-rootbucket",

Acl = "private",

ForceDestroy = true,

Tags = tags,

});

var rootVersioning = new Aws.S3.BucketVersioningV2("root_versioning", new()

{

Bucket = rootStorageBucket.Id,

VersioningConfiguration = new Aws.S3.Inputs.BucketVersioningV2VersioningConfigurationArgs

{

Status = "Disabled",

},

});

var rootStorageBucketBucketServerSideEncryptionConfigurationV2 = new Aws.S3.BucketServerSideEncryptionConfigurationV2("root_storage_bucket", new()

{

Bucket = rootStorageBucket.Bucket,

Rules = new[]

{

new Aws.S3.Inputs.BucketServerSideEncryptionConfigurationV2RuleArgs

{

ApplyServerSideEncryptionByDefault = new Aws.S3.Inputs.BucketServerSideEncryptionConfigurationV2RuleApplyServerSideEncryptionByDefaultArgs

{

SseAlgorithm = "AES256",

},

},

},

});

var rootStorageBucketBucketPublicAccessBlock = new Aws.S3.BucketPublicAccessBlock("root_storage_bucket", new()

{

Bucket = rootStorageBucket.Id,

BlockPublicAcls = true,

BlockPublicPolicy = true,

IgnorePublicAcls = true,

RestrictPublicBuckets = true,

}, new CustomResourceOptions

{

DependsOn =

{

rootStorageBucket,

},

});

var thisGetAwsBucketPolicy = Databricks.GetAwsBucketPolicy.Invoke(new()

{

Bucket = rootStorageBucket.Bucket,

});

var rootBucketPolicy = new Aws.S3.BucketPolicy("root_bucket_policy", new()

{

Bucket = rootStorageBucket.Id,

Policy = thisGetAwsBucketPolicy.Apply(getAwsBucketPolicyResult => getAwsBucketPolicyResult.Json),

}, new CustomResourceOptions

{

DependsOn =

{

rootStorageBucketBucketPublicAccessBlock,

},

});

var thisMwsStorageConfigurations = new Databricks.MwsStorageConfigurations("this", new()

{

AccountId = databricksAccountId,

StorageConfigurationName = $"{prefix}-storage",

BucketName = rootStorageBucket.Bucket,

});

var thisMwsWorkspaces = new Databricks.MwsWorkspaces("this", new()

{

AccountId = databricksAccountId,

WorkspaceName = prefix,

AwsRegion = "us-east-1",

CredentialsId = thisMwsCredentials.CredentialsId,

StorageConfigurationId = thisMwsStorageConfigurations.StorageConfigurationId,

Token = new Databricks.Inputs.MwsWorkspacesTokenArgs(),

CustomTags =

{

{ "SoldToCode", "1234" },

},

});

return new Dictionary<string, object?>

{

["databricksToken"] = thisMwsWorkspaces.Token.Apply(token => token?.TokenValue),

};

});

package generated_program;

import com.pulumi.Context;

import com.pulumi.Pulumi;

import com.pulumi.core.Output;

import com.pulumi.random.RandomString;

import com.pulumi.random.RandomStringArgs;

import com.pulumi.databricks.DatabricksFunctions;

import com.pulumi.databricks.inputs.GetAwsAssumeRolePolicyArgs;

import com.pulumi.aws.iam.Role;

import com.pulumi.aws.iam.RoleArgs;

import com.pulumi.databricks.inputs.GetAwsCrossAccountPolicyArgs;

import com.pulumi.aws.iam.RolePolicy;

import com.pulumi.aws.iam.RolePolicyArgs;

import com.pulumi.databricks.MwsCredentials;

import com.pulumi.databricks.MwsCredentialsArgs;

import com.pulumi.aws.s3.BucketV2;

import com.pulumi.aws.s3.BucketV2Args;

import com.pulumi.aws.s3.BucketVersioningV2;

import com.pulumi.aws.s3.BucketVersioningV2Args;

import com.pulumi.aws.s3.inputs.BucketVersioningV2VersioningConfigurationArgs;

import com.pulumi.aws.s3.BucketServerSideEncryptionConfigurationV2;

import com.pulumi.aws.s3.BucketServerSideEncryptionConfigurationV2Args;

import com.pulumi.aws.s3.inputs.BucketServerSideEncryptionConfigurationV2RuleArgs;

import com.pulumi.aws.s3.inputs.BucketServerSideEncryptionConfigurationV2RuleApplyServerSideEncryptionByDefaultArgs;

import com.pulumi.aws.s3.BucketPublicAccessBlock;

import com.pulumi.aws.s3.BucketPublicAccessBlockArgs;

import com.pulumi.databricks.inputs.GetAwsBucketPolicyArgs;

import com.pulumi.aws.s3.BucketPolicy;

import com.pulumi.aws.s3.BucketPolicyArgs;

import com.pulumi.databricks.MwsStorageConfigurations;

import com.pulumi.databricks.MwsStorageConfigurationsArgs;

import com.pulumi.databricks.MwsWorkspaces;

import com.pulumi.databricks.MwsWorkspacesArgs;

import com.pulumi.databricks.inputs.MwsWorkspacesTokenArgs;

import com.pulumi.resources.CustomResourceOptions;

import java.util.List;

import java.util.ArrayList;

import java.util.Map;

import java.io.File;

import java.nio.file.Files;

import java.nio.file.Paths;

public class App {

public static void main(String[] args) {

Pulumi.run(App::stack);

}

public static void stack(Context ctx) {

final var config = ctx.config();

final var databricksAccountId = config.get("databricksAccountId");

var naming = new RandomString("naming", RandomStringArgs.builder()

.special(false)

.upper(false)

.length(6)

.build());

final var prefix = String.format("dltp%s", naming.result());

final var this_ = DatabricksFunctions.getAwsAssumeRolePolicy(GetAwsAssumeRolePolicyArgs.builder()

.externalId(databricksAccountId)

.build());

var crossAccountRole = new Role("crossAccountRole", RoleArgs.builder()

.name(String.format("%s-crossaccount", prefix))

.assumeRolePolicy(this_.applyValue(getAwsAssumeRolePolicyResult -> getAwsAssumeRolePolicyResult.json()))

.tags(tags)

.build());

final var thisGetAwsCrossAccountPolicy = DatabricksFunctions.getAwsCrossAccountPolicy();

var thisRolePolicy = new RolePolicy("thisRolePolicy", RolePolicyArgs.builder()

.name(String.format("%s-policy", prefix))

.role(crossAccountRole.id())

.policy(thisGetAwsCrossAccountPolicy.applyValue(getAwsCrossAccountPolicyResult -> getAwsCrossAccountPolicyResult.json()))

.build());

var thisMwsCredentials = new MwsCredentials("thisMwsCredentials", MwsCredentialsArgs.builder()

.accountId(databricksAccountId)

.credentialsName(String.format("%s-creds", prefix))

.roleArn(crossAccountRole.arn())

.build());

var rootStorageBucket = new BucketV2("rootStorageBucket", BucketV2Args.builder()

.bucket(String.format("%s-rootbucket", prefix))

.acl("private")

.forceDestroy(true)

.tags(tags)

.build());

var rootVersioning = new BucketVersioningV2("rootVersioning", BucketVersioningV2Args.builder()

.bucket(rootStorageBucket.id())

.versioningConfiguration(BucketVersioningV2VersioningConfigurationArgs.builder()

.status("Disabled")

.build())

.build());

var rootStorageBucketBucketServerSideEncryptionConfigurationV2 = new BucketServerSideEncryptionConfigurationV2("rootStorageBucketBucketServerSideEncryptionConfigurationV2", BucketServerSideEncryptionConfigurationV2Args.builder()

.bucket(rootStorageBucket.bucket())

.rules(BucketServerSideEncryptionConfigurationV2RuleArgs.builder()

.applyServerSideEncryptionByDefault(BucketServerSideEncryptionConfigurationV2RuleApplyServerSideEncryptionByDefaultArgs.builder()

.sseAlgorithm("AES256")

.build())

.build())

.build());

var rootStorageBucketBucketPublicAccessBlock = new BucketPublicAccessBlock("rootStorageBucketBucketPublicAccessBlock", BucketPublicAccessBlockArgs.builder()

.bucket(rootStorageBucket.id())

.blockPublicAcls(true)

.blockPublicPolicy(true)

.ignorePublicAcls(true)

.restrictPublicBuckets(true)

.build(), CustomResourceOptions.builder()

.dependsOn(rootStorageBucket)

.build());

final var thisGetAwsBucketPolicy = DatabricksFunctions.getAwsBucketPolicy(GetAwsBucketPolicyArgs.builder()

.bucket(rootStorageBucket.bucket())

.build());

var rootBucketPolicy = new BucketPolicy("rootBucketPolicy", BucketPolicyArgs.builder()

.bucket(rootStorageBucket.id())

.policy(thisGetAwsBucketPolicy.applyValue(getAwsBucketPolicyResult -> getAwsBucketPolicyResult.json()))

.build(), CustomResourceOptions.builder()

.dependsOn(rootStorageBucketBucketPublicAccessBlock)

.build());

var thisMwsStorageConfigurations = new MwsStorageConfigurations("thisMwsStorageConfigurations", MwsStorageConfigurationsArgs.builder()

.accountId(databricksAccountId)

.storageConfigurationName(String.format("%s-storage", prefix))

.bucketName(rootStorageBucket.bucket())

.build());

var thisMwsWorkspaces = new MwsWorkspaces("thisMwsWorkspaces", MwsWorkspacesArgs.builder()

.accountId(databricksAccountId)

.workspaceName(prefix)

.awsRegion("us-east-1")

.credentialsId(thisMwsCredentials.credentialsId())

.storageConfigurationId(thisMwsStorageConfigurations.storageConfigurationId())

.token(MwsWorkspacesTokenArgs.builder().build())

.customTags(Map.of("SoldToCode", "1234"))

.build());

ctx.export("databricksToken", thisMwsWorkspaces.token().applyValue(token -> token.tokenValue()));

}

}

configuration:
  databricksAccountId:
    type: dynamic
resources:
  naming:
    type: random:string
    properties:
      special: false
      upper: false
      length: 6
  crossAccountRole:
    type: aws:iam:Role
    name: cross_account_role
    properties:
      name: ${prefix}-crossaccount
      assumeRolePolicy: ${this.json}
      tags: ${tags}
  thisRolePolicy:
    type: aws:iam:RolePolicy
    name: this
    properties:
      name: ${prefix}-policy
      role: ${crossAccountRole.id}
      policy: ${thisGetAwsCrossAccountPolicy.json}
  thisMwsCredentials:
    type: databricks:MwsCredentials
    name: this
    properties:
      accountId: ${databricksAccountId}
      credentialsName: ${prefix}-creds
      roleArn: ${crossAccountRole.arn}
  rootStorageBucket:
    type: aws:s3:BucketV2
    name: root_storage_bucket
    properties:
      bucket: ${prefix}-rootbucket
      acl: private
      forceDestroy: true
      tags: ${tags}
  rootVersioning:
    type: aws:s3:BucketVersioningV2
    name: root_versioning
    properties:
      bucket: ${rootStorageBucket.id}
      versioningConfiguration:
        status: Disabled
  rootStorageBucketBucketServerSideEncryptionConfigurationV2:
    type: aws:s3:BucketServerSideEncryptionConfigurationV2
    name: root_storage_bucket
    properties:
      bucket: ${rootStorageBucket.bucket}
      rules:
        - applyServerSideEncryptionByDefault:
            sseAlgorithm: AES256
  rootStorageBucketBucketPublicAccessBlock:
    type: aws:s3:BucketPublicAccessBlock
    name: root_storage_bucket
    properties:
      bucket: ${rootStorageBucket.id}
      blockPublicAcls: true
      blockPublicPolicy: true
      ignorePublicAcls: true
      restrictPublicBuckets: true
    options:
      dependsOn:
        - ${rootStorageBucket}
  rootBucketPolicy:
    type: aws:s3:BucketPolicy
    name: root_bucket_policy
    properties:
      bucket: ${rootStorageBucket.id}
      policy: ${thisGetAwsBucketPolicy.json}
    options:
      dependsOn:
        - ${rootStorageBucketBucketPublicAccessBlock}
  thisMwsStorageConfigurations:
    type: databricks:MwsStorageConfigurations
    name: this
    properties:
      accountId: ${databricksAccountId}
      storageConfigurationName: ${prefix}-storage
      bucketName: ${rootStorageBucket.bucket}
  thisMwsWorkspaces:
    type: databricks:MwsWorkspaces
    name: this
    properties:
      accountId: ${databricksAccountId}
      workspaceName: ${prefix}
      awsRegion: us-east-1
      credentialsId: ${thisMwsCredentials.credentialsId}
      storageConfigurationId: ${thisMwsStorageConfigurations.storageConfigurationId}
      token: {}
      customTags:
        SoldToCode: '1234'
variables:
  prefix: dltp${naming.result}
  this:
    fn::invoke:
      Function: databricks:getAwsAssumeRolePolicy
      Arguments:
        externalId: ${databricksAccountId}
  thisGetAwsCrossAccountPolicy:
    fn::invoke:
      Function: databricks:getAwsCrossAccountPolicy
      Arguments: {}
  thisGetAwsBucketPolicy:
    fn::invoke:
      Function: databricks:getAwsBucketPolicy
      Arguments:
        bucket: ${rootStorageBucket.bucket}
outputs:
  databricksToken: ${thisMwsWorkspaces.token.tokenValue}
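The examples above derive every resource name from one prefix (dltp plus a six-character random suffix). As a rough illustration only, the following Python sketch reproduces that naming scheme with the standard library. The helpers make_prefix and derive_names are hypothetical and are not part of any Pulumi or Databricks SDK; the sketch also uses Python's non-cryptographic random module rather than the random:string resource.

```python
import random
import string


def make_prefix(rng: random.Random, length: int = 6) -> str:
    """Mimic the `naming` resource: lowercase letters and digits, no specials."""
    alphabet = string.ascii_lowercase + string.digits
    return "dltp" + "".join(rng.choice(alphabet) for _ in range(length))


def derive_names(prefix: str) -> dict:
    """Return the names the example builds from the prefix (hypothetical helper)."""
    return {
        "role": f"{prefix}-crossaccount",
        "role_policy": f"{prefix}-policy",
        "credentials": f"{prefix}-creds",
        "bucket": f"{prefix}-rootbucket",
        "storage_configuration": f"{prefix}-storage",
        "workspace": prefix,
    }


# Print the derived names for one randomly generated prefix.
names = derive_names(make_prefix(random.Random(0)))
for key, value in names.items():
    print(f"{key}: {value}")
```

Keeping the suffix lowercase and free of special characters means the same prefix can be reused for the S3 bucket name without further escaping.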

To create a Databricks workspace that uses AWS PrivateLink, first read the Enable Private Link documentation, then customise the example above with the relevant examples from mws_vpc_endpoint, mws_private_access_settings and mws_networks.

Creating a Databricks on GCP workspace

To get a workspace running, you have to configure a network object:

- databricks.MwsNetworks - (optional, but recommended) You can share one customer-managed VPC with multiple workspaces in a single account. You do not have to create a new VPC for each workspace. However, you cannot reuse subnets with other resources, including other workspaces or non-Databricks resources. If you plan to share one VPC with multiple workspaces, be sure to size your VPC and subnets accordingly. Because a databricks.MwsNetworks resource encapsulates this information, you cannot reuse it across workspaces.
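The sizing advice above can be explored offline before any network is registered. The sketch below is one possible way to carve a shared VPC range into one non-overlapping subnet per workspace using Python's ipaddress module. The function name plan_subnets, the 10.0.0.0/16 range, the /20 subnet size and the workspace names are all assumptions made for illustration; the size you actually need depends on your cluster sizes.

```python
import ipaddress


def plan_subnets(vpc_cidr: str, workspaces: list, new_prefix: int) -> dict:
    """Assign each workspace its own non-overlapping subnet from the VPC range."""
    vpc = ipaddress.ip_network(vpc_cidr)
    subnets = vpc.subnets(new_prefix=new_prefix)  # generator of equal-sized blocks
    plan = {}
    for name in workspaces:
        try:
            plan[name] = next(subnets)
        except StopIteration:
            raise ValueError(
                f"{vpc_cidr} cannot hold {len(workspaces)} /{new_prefix} subnets"
            ) from None
    return plan


# Hypothetical values: three workspaces sharing one /16 VPC range.
plan = plan_subnets("10.0.0.0/16", ["ws-dev", "ws-staging", "ws-prod"], new_prefix=20)
for name, subnet in plan.items():
    print(name, subnet, subnet.num_addresses)
```

Because every workspace receives a distinct block, no subnet is reused across workspaces, which is the constraint the bullet above describes.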

import * as pulumi from "@pulumi/pulumi";

import * as databricks from "@pulumi/databricks";

const config = new pulumi.Config();

// Account ID that can be found in the top right corner of https://accounts.cloud.databricks.com/

const databricksAccountId = config.requireObject("databricksAccountId");

const databricksGoogleServiceAccount = config.requireObject("databricksGoogleServiceAccount");

const googleProject = config.requireObject("googleProject");

// register VPC

const _this = new databricks.MwsNetworks("this", {

accountId: databricksAccountId,

networkName: `${prefix}-network`,

gcpNetworkInfo: {

networkProjectId: googleProject,

vpcId: vpcId,

subnetId: subnetId,

subnetRegion: subnetRegion,

podIpRangeName: "pods",

serviceIpRangeName: "svc",

},

});

// create workspace in given VPC

const thisMwsWorkspaces = new databricks.MwsWorkspaces("this", {

accountId: databricksAccountId,

workspaceName: prefix,

location: subnetRegion,

cloudResourceContainer: {

gcp: {

projectId: googleProject,

},

},

networkId: _this.networkId,

gkeConfig: {

connectivityType: "PRIVATE_NODE_PUBLIC_MASTER",

masterIpRange: "10.3.0.0/28",

},

token: {},

});

export const databricksToken = thisMwsWorkspaces.token.apply(token => token?.tokenValue);

import pulumi

import pulumi_databricks as databricks

config = pulumi.Config()

# Account ID that can be found in the top right corner of https://accounts.cloud.databricks.com/

databricks_account_id = config.require_object("databricksAccountId")

databricks_google_service_account = config.require_object("databricksGoogleServiceAccount")

google_project = config.require_object("googleProject")

# register VPC

this = databricks.MwsNetworks("this",

account_id=databricks_account_id,

network_name=f"{prefix}-network",

gcp_network_info=databricks.MwsNetworksGcpNetworkInfoArgs(

network_project_id=google_project,

vpc_id=vpc_id,

subnet_id=subnet_id,

subnet_region=subnet_region,

pod_ip_range_name="pods",

service_ip_range_name="svc",

))

# create workspace in given VPC

this_mws_workspaces = databricks.MwsWorkspaces("this",

account_id=databricks_account_id,

workspace_name=prefix,

location=subnet_region,

cloud_resource_container=databricks.MwsWorkspacesCloudResourceContainerArgs(

gcp=databricks.MwsWorkspacesCloudResourceContainerGcpArgs(

project_id=google_project,

),

),

network_id=this.network_id,

gke_config=databricks.MwsWorkspacesGkeConfigArgs(

connectivity_type="PRIVATE_NODE_PUBLIC_MASTER",

master_ip_range="10.3.0.0/28",

),

token=databricks.MwsWorkspacesTokenArgs())

pulumi.export("databricksToken", this_mws_workspaces.token.token_value)

package main

import (

"fmt"

"github.com/pulumi/pulumi-databricks/sdk/go/databricks"

"github.com/pulumi/pulumi/sdk/v3/go/pulumi"

"github.com/pulumi/pulumi/sdk/v3/go/pulumi/config"

)

func main() {

pulumi.Run(func(ctx *pulumi.Context) error {

cfg := config.New(ctx, "")

// Account ID that can be found in the top right corner of https://accounts.cloud.databricks.com/

databricksAccountId := cfg.RequireObject("databricksAccountId")

databricksGoogleServiceAccount := cfg.RequireObject("databricksGoogleServiceAccount")

googleProject := cfg.RequireObject("googleProject")

// register VPC

this, err := databricks.NewMwsNetworks(ctx, "this", &databricks.MwsNetworksArgs{

AccountId: pulumi.Any(databricksAccountId),

NetworkName: pulumi.String(fmt.Sprintf("%v-network", prefix)),

GcpNetworkInfo: &databricks.MwsNetworksGcpNetworkInfoArgs{

NetworkProjectId: pulumi.Any(googleProject),

VpcId: pulumi.Any(vpcId),

SubnetId: pulumi.Any(subnetId),

SubnetRegion: pulumi.Any(subnetRegion),

PodIpRangeName: pulumi.String("pods"),

ServiceIpRangeName: pulumi.String("svc"),

},

})

if err != nil {

return err

}

// create workspace in given VPC

thisMwsWorkspaces, err := databricks.NewMwsWorkspaces(ctx, "this", &databricks.MwsWorkspacesArgs{

AccountId: pulumi.Any(databricksAccountId),

WorkspaceName: pulumi.Any(prefix),

Location: pulumi.Any(subnetRegion),

CloudResourceContainer: &databricks.MwsWorkspacesCloudResourceContainerArgs{

Gcp: &databricks.MwsWorkspacesCloudResourceContainerGcpArgs{

ProjectId: pulumi.Any(googleProject),

},

},

NetworkId: this.NetworkId,

GkeConfig: &databricks.MwsWorkspacesGkeConfigArgs{

ConnectivityType: pulumi.String("PRIVATE_NODE_PUBLIC_MASTER"),

MasterIpRange: pulumi.String("10.3.0.0/28"),

},

Token: &databricks.MwsWorkspacesTokenArgs{},

})

if err != nil {

return err

}

ctx.Export("databricksToken", thisMwsWorkspaces.Token.ApplyT(func(token *databricks.MwsWorkspacesToken) (*string, error) {

// The token block is optional, so guard against a nil value.

if token == nil {

return nil, nil

}

return token.TokenValue, nil

}).(pulumi.StringPtrOutput))

return nil

})

}

using System.Collections.Generic;

using System.Linq;

using Pulumi;

using Databricks = Pulumi.Databricks;

return await Deployment.RunAsync(() =>

{

var config = new Config();

// Account ID that can be found in the top right corner of https://accounts.cloud.databricks.com/

var databricksAccountId = config.RequireObject<dynamic>("databricksAccountId");

var databricksGoogleServiceAccount = config.RequireObject<dynamic>("databricksGoogleServiceAccount");

var googleProject = config.RequireObject<dynamic>("googleProject");

// register VPC

var @this = new Databricks.MwsNetworks("this", new()

{

AccountId = databricksAccountId,

NetworkName = $"{prefix}-network",

GcpNetworkInfo = new Databricks.Inputs.MwsNetworksGcpNetworkInfoArgs

{

NetworkProjectId = googleProject,

VpcId = vpcId,

SubnetId = subnetId,

SubnetRegion = subnetRegion,

PodIpRangeName = "pods",

ServiceIpRangeName = "svc",

},

});

// create workspace in given VPC

var thisMwsWorkspaces = new Databricks.MwsWorkspaces("this", new()

{

AccountId = databricksAccountId,

WorkspaceName = prefix,

Location = subnetRegion,

CloudResourceContainer = new Databricks.Inputs.MwsWorkspacesCloudResourceContainerArgs

{

Gcp = new Databricks.Inputs.MwsWorkspacesCloudResourceContainerGcpArgs

{

ProjectId = googleProject,

},

},

NetworkId = @this.NetworkId,

GkeConfig = new Databricks.Inputs.MwsWorkspacesGkeConfigArgs

{

ConnectivityType = "PRIVATE_NODE_PUBLIC_MASTER",

MasterIpRange = "10.3.0.0/28",

},

Token = new Databricks.Inputs.MwsWorkspacesTokenArgs(),

});

return new Dictionary<string, object?>

{

["databricksToken"] = thisMwsWorkspaces.Token.Apply(token => token?.TokenValue),

};

});

package generated_program;

import com.pulumi.Context;

import com.pulumi.Pulumi;

import com.pulumi.core.Output;

import com.pulumi.databricks.MwsNetworks;

import com.pulumi.databricks.MwsNetworksArgs;

import com.pulumi.databricks.inputs.MwsNetworksGcpNetworkInfoArgs;

import com.pulumi.databricks.MwsWorkspaces;

import com.pulumi.databricks.MwsWorkspacesArgs;

import com.pulumi.databricks.inputs.MwsWorkspacesCloudResourceContainerArgs;

import com.pulumi.databricks.inputs.MwsWorkspacesCloudResourceContainerGcpArgs;

import com.pulumi.databricks.inputs.MwsWorkspacesGkeConfigArgs;

import com.pulumi.databricks.inputs.MwsWorkspacesTokenArgs;

import java.util.List;

import java.util.ArrayList;

import java.util.Map;

import java.io.File;

import java.nio.file.Files;

import java.nio.file.Paths;

public class App {

public static void main(String[] args) {

Pulumi.run(App::stack);

}

public static void stack(Context ctx) {

final var config = ctx.config();

final var databricksAccountId = config.get("databricksAccountId");

final var databricksGoogleServiceAccount = config.get("databricksGoogleServiceAccount");

final var googleProject = config.get("googleProject");

// register VPC

var this_ = new MwsNetworks("this", MwsNetworksArgs.builder()

.accountId(databricksAccountId)

.networkName(String.format("%s-network", prefix))

.gcpNetworkInfo(MwsNetworksGcpNetworkInfoArgs.builder()

.networkProjectId(googleProject)

.vpcId(vpcId)

.subnetId(subnetId)

.subnetRegion(subnetRegion)

.podIpRangeName("pods")

.serviceIpRangeName("svc")

.build())

.build());

// create workspace in given VPC

var thisMwsWorkspaces = new MwsWorkspaces("thisMwsWorkspaces", MwsWorkspacesArgs.builder()

.accountId(databricksAccountId)

.workspaceName(prefix)

.location(subnetRegion)

.cloudResourceContainer(MwsWorkspacesCloudResourceContainerArgs.builder()

.gcp(MwsWorkspacesCloudResourceContainerGcpArgs.builder()

.projectId(googleProject)

.build())

.build())

.networkId(this_.networkId())

.gkeConfig(MwsWorkspacesGkeConfigArgs.builder()

.connectivityType("PRIVATE_NODE_PUBLIC_MASTER")

.masterIpRange("10.3.0.0/28")

.build())

.token(MwsWorkspacesTokenArgs.builder().build())

.build());

ctx.export("databricksToken", thisMwsWorkspaces.token().applyValue(token -> token.tokenValue()));

}

}

configuration:
  databricksAccountId:
    type: dynamic
  databricksGoogleServiceAccount:
    type: dynamic
  googleProject:
    type: dynamic
resources:
  # register VPC
  this:
    type: databricks:MwsNetworks
    properties:
      accountId: ${databricksAccountId}
      networkName: ${prefix}-network
      gcpNetworkInfo:
        networkProjectId: ${googleProject}
        vpcId: ${vpcId}
        subnetId: ${subnetId}
        subnetRegion: ${subnetRegion}
        podIpRangeName: pods
        serviceIpRangeName: svc
  # create workspace in given VPC
  thisMwsWorkspaces:
    type: databricks:MwsWorkspaces
    name: this
    properties:
      accountId: ${databricksAccountId}
      workspaceName: ${prefix}
      location: ${subnetRegion}
      cloudResourceContainer:
        gcp:
          projectId: ${googleProject}
      networkId: ${this.networkId}
      gkeConfig:
        connectivityType: PRIVATE_NODE_PUBLIC_MASTER
        masterIpRange: 10.3.0.0/28
      token: {}
outputs:
  databricksToken: ${thisMwsWorkspaces.token.tokenValue}

To create a Databricks workspace that uses GCP Private Service Connect, first read the Enable Private Service Connect documentation, then customise the example above with the relevant examples from mws_vpc_endpoint, mws_private_access_settings and mws_networks.
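The GCP example above sets masterIpRange to 10.3.0.0/28 next to a customer-managed subnet with named pod and service ranges. A quick pre-flight check of such ranges might look like the sketch below. It assumes the GKE control-plane range should be a /28 that does not overlap the subnet, pod or service ranges; the function name check_master_range and every CIDR other than 10.3.0.0/28 are hypothetical, and the authoritative rules are in the Databricks and GKE documentation.

```python
import ipaddress


def check_master_range(master_cidr: str, other_cidrs: dict) -> list:
    """Return a list of human-readable problems; an empty list means none found."""
    problems = []
    master = ipaddress.ip_network(master_cidr)
    # Assumption: the control-plane range is expected to be exactly a /28.
    if master.prefixlen != 28:
        problems.append(f"master range {master} is a /{master.prefixlen}, expected /28")
    for label, cidr in other_cidrs.items():
        if master.overlaps(ipaddress.ip_network(cidr)):
            problems.append(f"master range {master} overlaps {label} range {cidr}")
    return problems


# Sample values: only 10.3.0.0/28 comes from the example; the rest are made up.
ranges = {"subnet": "10.0.0.0/16", "pods": "10.1.0.0/16", "svc": "10.2.0.0/20"}
print(check_master_range("10.3.0.0/28", ranges))
print(check_master_range("10.1.0.0/24", ranges))
```

The first call reports no problems, while the second flags both the prefix length and the overlap with the pod range.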

Create MwsWorkspaces Resource

Resources are created with functions called constructors. To learn more about declaring and configuring resources, see Resources.

Constructor syntax

new MwsWorkspaces(name: string, args: MwsWorkspacesArgs, opts?: CustomResourceOptions);

@overload

def MwsWorkspaces(resource_name: str,

args: MwsWorkspacesArgs,

opts: Optional[ResourceOptions] = None)

@overload

def MwsWorkspaces(resource_name: str,

opts: Optional[ResourceOptions] = None,

account_id: Optional[str] = None,

workspace_name: Optional[str] = None,

is_no_public_ip_enabled: Optional[bool] = None,

workspace_status: Optional[str] = None,

creation_time: Optional[int] = None,

credentials_id: Optional[str] = None,

custom_tags: Optional[Mapping[str, Any]] = None,

customer_managed_key_id: Optional[str] = None,

deployment_name: Optional[str] = None,

external_customer_info: Optional[MwsWorkspacesExternalCustomerInfoArgs] = None,

gcp_managed_network_config: Optional[MwsWorkspacesGcpManagedNetworkConfigArgs] = None,

gke_config: Optional[MwsWorkspacesGkeConfigArgs] = None,

workspace_url: Optional[str] = None,

cloud_resource_container: Optional[MwsWorkspacesCloudResourceContainerArgs] = None,

pricing_tier: Optional[str] = None,

network_id: Optional[str] = None,

managed_services_customer_managed_key_id: Optional[str] = None,

private_access_settings_id: Optional[str] = None,

storage_configuration_id: Optional[str] = None,

storage_customer_managed_key_id: Optional[str] = None,

token: Optional[MwsWorkspacesTokenArgs] = None,

workspace_id: Optional[str] = None,

aws_region: Optional[str] = None,

location: Optional[str] = None,

workspace_status_message: Optional[str] = None,

cloud: Optional[str] = None)

func NewMwsWorkspaces(ctx *Context, name string, args MwsWorkspacesArgs, opts ...ResourceOption) (*MwsWorkspaces, error)

public MwsWorkspaces(string name, MwsWorkspacesArgs args, CustomResourceOptions? opts = null)

public MwsWorkspaces(String name, MwsWorkspacesArgs args)

public MwsWorkspaces(String name, MwsWorkspacesArgs args, CustomResourceOptions options)

type: databricks:MwsWorkspaces

properties: # The arguments to resource properties.

options: # Bag of options to control resource's behavior.

Parameters

- name string

- The unique name of the resource.

- args MwsWorkspacesArgs

- The arguments to resource properties.

- opts CustomResourceOptions

- Bag of options to control resource's behavior.

- resource_name str

- The unique name of the resource.

- args MwsWorkspacesArgs

- The arguments to resource properties.

- opts ResourceOptions

- Bag of options to control resource's behavior.

- ctx Context

- Context object for the current deployment.

- name string

- The unique name of the resource.

- args MwsWorkspacesArgs

- The arguments to resource properties.

- opts ResourceOption

- Bag of options to control resource's behavior.

- name string

- The unique name of the resource.

- args MwsWorkspacesArgs

- The arguments to resource properties.

- opts CustomResourceOptions

- Bag of options to control resource's behavior.

- name String

- The unique name of the resource.

- args MwsWorkspacesArgs

- The arguments to resource properties.

- options CustomResourceOptions

- Bag of options to control resource's behavior.

Constructor example

The following reference example uses placeholder values for all input properties.

var mwsWorkspacesResource = new Databricks.MwsWorkspaces("mwsWorkspacesResource", new()

{

AccountId = "string",

WorkspaceName = "string",

IsNoPublicIpEnabled = false,

WorkspaceStatus = "string",

CreationTime = 0,

CredentialsId = "string",

CustomTags =

{

{ "string", "any" },

},

DeploymentName = "string",

ExternalCustomerInfo = new Databricks.Inputs.MwsWorkspacesExternalCustomerInfoArgs

{

AuthoritativeUserEmail = "string",

AuthoritativeUserFullName = "string",

CustomerName = "string",

},

GcpManagedNetworkConfig = new Databricks.Inputs.MwsWorkspacesGcpManagedNetworkConfigArgs

{

GkeClusterPodIpRange = "string",

GkeClusterServiceIpRange = "string",

SubnetCidr = "string",

},

GkeConfig = new Databricks.Inputs.MwsWorkspacesGkeConfigArgs

{

ConnectivityType = "string",

MasterIpRange = "string",

},

WorkspaceUrl = "string",

CloudResourceContainer = new Databricks.Inputs.MwsWorkspacesCloudResourceContainerArgs

{

Gcp = new Databricks.Inputs.MwsWorkspacesCloudResourceContainerGcpArgs

{

ProjectId = "string",

},

},

PricingTier = "string",

NetworkId = "string",

ManagedServicesCustomerManagedKeyId = "string",

PrivateAccessSettingsId = "string",

StorageConfigurationId = "string",

StorageCustomerManagedKeyId = "string",

Token = new Databricks.Inputs.MwsWorkspacesTokenArgs

{

Comment = "string",

LifetimeSeconds = 0,

TokenId = "string",

TokenValue = "string",

},

WorkspaceId = "string",

AwsRegion = "string",

Location = "string",

WorkspaceStatusMessage = "string",

Cloud = "string",

});

example, err := databricks.NewMwsWorkspaces(ctx, "mwsWorkspacesResource", &databricks.MwsWorkspacesArgs{

AccountId: pulumi.String("string"),

WorkspaceName: pulumi.String("string"),

IsNoPublicIpEnabled: pulumi.Bool(false),

WorkspaceStatus: pulumi.String("string"),

CreationTime: pulumi.Int(0),

CredentialsId: pulumi.String("string"),

CustomTags: pulumi.Map{

"string": pulumi.Any("any"),

},

DeploymentName: pulumi.String("string"),

ExternalCustomerInfo: &databricks.MwsWorkspacesExternalCustomerInfoArgs{

AuthoritativeUserEmail: pulumi.String("string"),

AuthoritativeUserFullName: pulumi.String("string"),

CustomerName: pulumi.String("string"),

},

GcpManagedNetworkConfig: &databricks.MwsWorkspacesGcpManagedNetworkConfigArgs{

GkeClusterPodIpRange: pulumi.String("string"),

GkeClusterServiceIpRange: pulumi.String("string"),

SubnetCidr: pulumi.String("string"),

},

GkeConfig: &databricks.MwsWorkspacesGkeConfigArgs{

ConnectivityType: pulumi.String("string"),

MasterIpRange: pulumi.String("string"),

},

WorkspaceUrl: pulumi.String("string"),

CloudResourceContainer: &databricks.MwsWorkspacesCloudResourceContainerArgs{

Gcp: &databricks.MwsWorkspacesCloudResourceContainerGcpArgs{

ProjectId: pulumi.String("string"),

},

},

PricingTier: pulumi.String("string"),

NetworkId: pulumi.String("string"),

ManagedServicesCustomerManagedKeyId: pulumi.String("string"),

PrivateAccessSettingsId: pulumi.String("string"),

StorageConfigurationId: pulumi.String("string"),

StorageCustomerManagedKeyId: pulumi.String("string"),

Token: &databricks.MwsWorkspacesTokenArgs{

Comment: pulumi.String("string"),

LifetimeSeconds: pulumi.Int(0),

TokenId: pulumi.String("string"),

TokenValue: pulumi.String("string"),

},

WorkspaceId: pulumi.String("string"),

AwsRegion: pulumi.String("string"),

Location: pulumi.String("string"),

WorkspaceStatusMessage: pulumi.String("string"),

Cloud: pulumi.String("string"),

})

var mwsWorkspacesResource = new MwsWorkspaces("mwsWorkspacesResource", MwsWorkspacesArgs.builder()

.accountId("string")

.workspaceName("string")

.isNoPublicIpEnabled(false)

.workspaceStatus("string")

.creationTime(0)

.credentialsId("string")

.customTags(Map.of("string", "any"))

.deploymentName("string")

.externalCustomerInfo(MwsWorkspacesExternalCustomerInfoArgs.builder()

.authoritativeUserEmail("string")

.authoritativeUserFullName("string")

.customerName("string")

.build())

.gcpManagedNetworkConfig(MwsWorkspacesGcpManagedNetworkConfigArgs.builder()

.gkeClusterPodIpRange("string")

.gkeClusterServiceIpRange("string")

.subnetCidr("string")

.build())

.gkeConfig(MwsWorkspacesGkeConfigArgs.builder()

.connectivityType("string")

.masterIpRange("string")

.build())

.workspaceUrl("string")

.cloudResourceContainer(MwsWorkspacesCloudResourceContainerArgs.builder()

.gcp(MwsWorkspacesCloudResourceContainerGcpArgs.builder()

.projectId("string")

.build())

.build())

.pricingTier("string")

.networkId("string")

.managedServicesCustomerManagedKeyId("string")

.privateAccessSettingsId("string")

.storageConfigurationId("string")

.storageCustomerManagedKeyId("string")

.token(MwsWorkspacesTokenArgs.builder()

.comment("string")

.lifetimeSeconds(0)

.tokenId("string")

.tokenValue("string")

.build())

.workspaceId("string")

.awsRegion("string")

.location("string")

.workspaceStatusMessage("string")

.cloud("string")

.build());

mws_workspaces_resource = databricks.MwsWorkspaces("mwsWorkspacesResource",

account_id="string",

workspace_name="string",

is_no_public_ip_enabled=False,

workspace_status="string",

creation_time=0,

credentials_id="string",

custom_tags={

"string": "any",

},

deployment_name="string",

external_customer_info=databricks.MwsWorkspacesExternalCustomerInfoArgs(

authoritative_user_email="string",

authoritative_user_full_name="string",

customer_name="string",

),

gcp_managed_network_config=databricks.MwsWorkspacesGcpManagedNetworkConfigArgs(

gke_cluster_pod_ip_range="string",

gke_cluster_service_ip_range="string",

subnet_cidr="string",

),

gke_config=databricks.MwsWorkspacesGkeConfigArgs(

connectivity_type="string",

master_ip_range="string",

),

workspace_url="string",

cloud_resource_container=databricks.MwsWorkspacesCloudResourceContainerArgs(

gcp=databricks.MwsWorkspacesCloudResourceContainerGcpArgs(

project_id="string",

),

),

pricing_tier="string",

network_id="string",

managed_services_customer_managed_key_id="string",

private_access_settings_id="string",

storage_configuration_id="string",

storage_customer_managed_key_id="string",

token=databricks.MwsWorkspacesTokenArgs(

comment="string",

lifetime_seconds=0,

token_id="string",

token_value="string",

),

workspace_id="string",

aws_region="string",

location="string",

workspace_status_message="string",

cloud="string")

const mwsWorkspacesResource = new databricks.MwsWorkspaces("mwsWorkspacesResource", {

accountId: "string",

workspaceName: "string",

isNoPublicIpEnabled: false,

workspaceStatus: "string",

creationTime: 0,

credentialsId: "string",

customTags: {

string: "any",

},

deploymentName: "string",

externalCustomerInfo: {

authoritativeUserEmail: "string",

authoritativeUserFullName: "string",

customerName: "string",

},

gcpManagedNetworkConfig: {

gkeClusterPodIpRange: "string",

gkeClusterServiceIpRange: "string",

subnetCidr: "string",

},

gkeConfig: {

connectivityType: "string",

masterIpRange: "string",

},

workspaceUrl: "string",

cloudResourceContainer: {

gcp: {

projectId: "string",

},

},

pricingTier: "string",

networkId: "string",

managedServicesCustomerManagedKeyId: "string",

privateAccessSettingsId: "string",

storageConfigurationId: "string",

storageCustomerManagedKeyId: "string",

token: {

comment: "string",

lifetimeSeconds: 0,

tokenId: "string",

tokenValue: "string",

},

workspaceId: "string",

awsRegion: "string",

location: "string",

workspaceStatusMessage: "string",

cloud: "string",

});

type: databricks:MwsWorkspaces
properties:
  accountId: string
  awsRegion: string
  cloud: string
  cloudResourceContainer:
    gcp:
      projectId: string
  creationTime: 0
  credentialsId: string
  customTags:
    string: any
  deploymentName: string
  externalCustomerInfo:
    authoritativeUserEmail: string
    authoritativeUserFullName: string
    customerName: string
  gcpManagedNetworkConfig:
    gkeClusterPodIpRange: string
    gkeClusterServiceIpRange: string
    subnetCidr: string
  gkeConfig:
    connectivityType: string
    masterIpRange: string
  isNoPublicIpEnabled: false
  location: string
  managedServicesCustomerManagedKeyId: string
  networkId: string
  pricingTier: string
  privateAccessSettingsId: string
  storageConfigurationId: string
  storageCustomerManagedKeyId: string
  token:
    comment: string
    lifetimeSeconds: 0
    tokenId: string
    tokenValue: string
  workspaceId: string
  workspaceName: string
  workspaceStatus: string
  workspaceStatusMessage: string
  workspaceUrl: string

MwsWorkspaces Resource Properties

To learn more about resource properties and how to use them, see Inputs and Outputs in the Architecture and Concepts docs.

Inputs

The MwsWorkspaces resource accepts the following input properties:

- AccountId string - Account Id that could be found in the top right corner of Accounts Console.

- WorkspaceName string - name of the workspace, will appear on UI.

- AwsRegion string - region of VPC.

- Cloud string

- CloudResourceContainer MwsWorkspacesCloudResourceContainer - A block that specifies GCP workspace configurations, consisting of following blocks:

- CreationTime int - (Integer) time when workspace was created

- CredentialsId string

- CustomTags Dictionary<string, object> - The custom tags key-value pairing that is attached to this workspace. These tags will be applied to clusters automatically in addition to any default_tags or custom_tags on a cluster level. Please note it can take up to an hour for custom_tags to be set due to scheduling on Control Plane. After custom tags are applied, they can be modified however they can never be completely removed.

- CustomerManagedKeyId string

- DeploymentName string - part of URL as in https://<prefix>-<deployment-name>.cloud.databricks.com. Deployment name cannot be used until a deployment name prefix is defined. Please contact your Databricks representative. Once a new deployment prefix is added/updated, it only will affect the new workspaces created.

- ExternalCustomerInfo MwsWorkspacesExternalCustomerInfo

- GcpManagedNetworkConfig MwsWorkspacesGcpManagedNetworkConfig

- GkeConfig MwsWorkspacesGkeConfig - A block that specifies GKE configuration for the Databricks workspace:

- IsNoPublicIpEnabled bool

- Location string - region of the subnet.

- ManagedServicesCustomerManagedKeyId string - customer_managed_key_id from customer managed keys with use_cases set to MANAGED_SERVICES. This is used to encrypt the workspace's notebook and secret data in the control plane.

- NetworkId string - network_id from networks.

- PricingTier string - The pricing tier of the workspace.

- PrivateAccessSettingsId string - Canonical unique identifier of databricks.MwsPrivateAccessSettings in Databricks Account.

- StorageConfigurationId string - storage_configuration_id from storage configuration.

- StorageCustomerManagedKeyId string - customer_managed_key_id from customer managed keys with use_cases set to STORAGE. This is used to encrypt the DBFS Storage & Cluster Volumes.

- Token MwsWorkspacesToken

- WorkspaceId string - (String) workspace id

- WorkspaceStatus string - (String) workspace status

- WorkspaceStatusMessage string - (String) updates on workspace status

- WorkspaceUrl string - (String) URL of the workspace
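The deployment name description above gives the URL pattern https://<prefix>-<deployment-name>.cloud.databricks.com. A minimal sketch of that string assembly follows. The function name workspace_url and the sample values are hypothetical, and the real deployment prefix is the one Databricks defines for your account.

```python
def workspace_url(deployment_prefix: str, deployment_name: str) -> str:
    """Assemble the workspace URL from the documented pattern."""
    if not deployment_prefix or not deployment_name:
        raise ValueError("both the prefix and the deployment name are required")
    return f"https://{deployment_prefix}-{deployment_name}.cloud.databricks.com"


# Hypothetical prefix and deployment name.
print(workspace_url("acme", "analytics"))
```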

- AccountId string - Account Id that could be found in the top right corner of Accounts Console.

- WorkspaceName string - name of the workspace, will appear on UI.

- AwsRegion string - region of VPC.

- Cloud string

- CloudResourceContainer MwsWorkspacesCloudResourceContainerArgs - A block that specifies GCP workspace configurations, consisting of following blocks:

- CreationTime int - (Integer) time when workspace was created

- CredentialsId string

- CustomTags map[string]interface{} - The custom tags key-value pairing that is attached to this workspace. These tags will be applied to clusters automatically in addition to any default_tags or custom_tags on a cluster level. Please note it can take up to an hour for custom_tags to be set due to scheduling on Control Plane. After custom tags are applied, they can be modified however they can never be completely removed.

- CustomerManagedKeyId string

- DeploymentName string - part of URL as in https://<prefix>-<deployment-name>.cloud.databricks.com. Deployment name cannot be used until a deployment name prefix is defined. Please contact your Databricks representative. Once a new deployment prefix is added/updated, it only will affect the new workspaces created.

- ExternalCustomerInfo MwsWorkspacesExternalCustomerInfoArgs

- GcpManagedNetworkConfig MwsWorkspacesGcpManagedNetworkConfigArgs

- GkeConfig MwsWorkspacesGkeConfigArgs - A block that specifies GKE configuration for the Databricks workspace:

- IsNoPublicIpEnabled bool

- Location string - region of the subnet.

- ManagedServicesCustomerManagedKeyId string - customer_managed_key_id from customer managed keys with use_cases set to MANAGED_SERVICES. This is used to encrypt the workspace's notebook and secret data in the control plane.

- NetworkId string - network_id from networks.

- PricingTier string - The pricing tier of the workspace.

- PrivateAccessSettingsId string - Canonical unique identifier of databricks.MwsPrivateAccessSettings in Databricks Account.

- StorageConfigurationId string - storage_configuration_id from storage configuration.

- StorageCustomerManagedKeyId string - customer_managed_key_id from customer managed keys with use_cases set to STORAGE. This is used to encrypt the DBFS Storage & Cluster Volumes.

- Token MwsWorkspacesTokenArgs

- WorkspaceId string - (String) workspace id

- WorkspaceStatus string - (String) workspace status

- WorkspaceStatusMessage string - (String) updates on workspace status

- WorkspaceUrl string - (String) URL of the workspace
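The two key properties above each expect a customer_managed_key_id whose use_cases include a specific value: MANAGED_SERVICES for the managed services key and STORAGE for the storage key. The sketch below illustrates that mapping with plain dictionaries. The helper pick_key_ids and the record contents are made up for illustration; real IDs would come from databricks.MwsCustomerManagedKeys resources.

```python
def pick_key_ids(keys: list) -> dict:
    """Map each workspace key argument to the first key registered for its use case."""
    wanted = {
        "managed_services_customer_managed_key_id": "MANAGED_SERVICES",
        "storage_customer_managed_key_id": "STORAGE",
    }
    picked = {}
    for argument, use_case in wanted.items():
        for key in keys:
            if use_case in key["use_cases"]:
                picked[argument] = key["customer_managed_key_id"]
                break
    return picked


# Made-up records shaped like the two fields mentioned above.
keys = [
    {"customer_managed_key_id": "key-aaa", "use_cases": ["MANAGED_SERVICES"]},
    {"customer_managed_key_id": "key-bbb", "use_cases": ["STORAGE"]},
]
print(pick_key_ids(keys))
```

A single key registered for both use cases would be picked for both arguments, which matches the note earlier on this page that a customer-managed key can be shared.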

- account

Id String - Account Id that could be found in the top right corner of Accounts Console.

- workspace

Name String - name of the workspace, will appear on UI.

- aws

Region String - region of VPC.

- cloud String

- cloud

Resource MwsContainer Workspaces Cloud Resource Container - A block that specifies GCP workspace configurations, consisting of following blocks:

- creation

Time Integer - (Integer) time when workspace was created

- credentialsId String

- customTags Map<String,Object> - The custom tags key-value pairing that is attached to this workspace. These tags will be applied to clusters automatically in addition to any default_tags or custom_tags on a cluster level. Please note it can take up to an hour for custom_tags to be set due to scheduling on Control Plane. After custom tags are applied, they can be modified, but they can never be completely removed.

- customerManagedKeyId String

- deploymentName String - part of URL as in https://<prefix>-<deployment-name>.cloud.databricks.com. Deployment name cannot be used until a deployment name prefix is defined. Please contact your Databricks representative. Once a new deployment prefix is added or updated, it will only affect workspaces created afterward.

- externalCustomerInfo MwsWorkspacesExternalCustomerInfo

- gcpManagedNetworkConfig MwsWorkspacesGcpManagedNetworkConfig

- gkeConfig MwsWorkspacesGkeConfig - A block that specifies GKE configuration for the Databricks workspace:

- isNoPublicIpEnabled Boolean

- location String - region of the subnet.

- managedServicesCustomerManagedKeyId String - customer_managed_key_id from customer managed keys with use_cases set to MANAGED_SERVICES. This is used to encrypt the workspace's notebook and secret data in the control plane.

- networkId String - network_id from networks.

- pricingTier String - The pricing tier of the workspace.

- privateAccessSettingsId String - Canonical unique identifier of databricks.MwsPrivateAccessSettings in Databricks Account.

- storageConfigurationId String - storage_configuration_id from storage configuration.

- storageCustomerManagedKeyId String - customer_managed_key_id from customer managed keys with use_cases set to STORAGE. This is used to encrypt the DBFS Storage & Cluster Volumes.

- token MwsWorkspacesToken

- workspaceId String - (String) workspace id

- workspaceStatus String - (String) workspace status

- workspaceStatusMessage String - (String) updates on workspace status

- workspaceUrl String - (String) URL of the workspace

- accountId string - Account ID, which can be found in the top-right corner of the Accounts Console.

- workspaceName string - name of the workspace, as it will appear in the UI.

- awsRegion string - region of VPC.

- cloud string

- cloudResourceContainer MwsWorkspacesCloudResourceContainer - A block that specifies GCP workspace configurations, consisting of the following blocks:

- creationTime number - (Integer) time when workspace was created

- credentialsId string

- customTags {[key: string]: any} - The custom tags key-value pairing that is attached to this workspace. These tags will be applied to clusters automatically in addition to any default_tags or custom_tags on a cluster level. Please note it can take up to an hour for custom_tags to be set due to scheduling on Control Plane. After custom tags are applied, they can be modified, but they can never be completely removed.

- customerManagedKeyId string

- deploymentName string - part of URL as in https://<prefix>-<deployment-name>.cloud.databricks.com. Deployment name cannot be used until a deployment name prefix is defined. Please contact your Databricks representative. Once a new deployment prefix is added or updated, it will only affect workspaces created afterward.

- externalCustomerInfo MwsWorkspacesExternalCustomerInfo

- gcpManagedNetworkConfig MwsWorkspacesGcpManagedNetworkConfig

- gkeConfig MwsWorkspacesGkeConfig - A block that specifies GKE configuration for the Databricks workspace:

- isNoPublicIpEnabled boolean

- location string - region of the subnet.

- managedServicesCustomerManagedKeyId string - customer_managed_key_id from customer managed keys with use_cases set to MANAGED_SERVICES. This is used to encrypt the workspace's notebook and secret data in the control plane.

- networkId string - network_id from networks.

- pricingTier string - The pricing tier of the workspace.

- privateAccessSettingsId string - Canonical unique identifier of databricks.MwsPrivateAccessSettings in Databricks Account.

- storageConfigurationId string - storage_configuration_id from storage configuration.

- storageCustomerManagedKeyId string - customer_managed_key_id from customer managed keys with use_cases set to STORAGE. This is used to encrypt the DBFS Storage & Cluster Volumes.

- token MwsWorkspacesToken

- workspaceId string - (String) workspace id

- workspaceStatus string - (String) workspace status

- workspaceStatusMessage string - (String) updates on workspace status

- workspaceUrl string - (String) URL of the workspace
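As a rough illustration of how the deployment name relates to the workspace URL, the sketch below builds the `https://<prefix>-<deployment-name>.cloud.databricks.com` pattern from its two parts. The `WorkspaceInputs` interface and the `expectedWorkspaceUrl` helper are hypothetical names made up for this example; they only mirror a few of the inputs listed above and are not part of the provider's API. The account ID, prefix, and names are placeholders.

```typescript
// Hypothetical local type mirroring a few of the inputs listed above.
// This is NOT the provider's own args type; it exists only for illustration.
interface WorkspaceInputs {
    accountId: string;
    workspaceName: string;
    awsRegion?: string;
    deploymentName?: string;
    customTags?: { [key: string]: string };
}

// Hypothetical helper: assembles the documented URL pattern
// https://<prefix>-<deployment-name>.cloud.databricks.com.
// `prefix` stands for the account-level deployment name prefix.
function expectedWorkspaceUrl(prefix: string, deploymentName: string): string {
    return `https://${prefix}-${deploymentName}.cloud.databricks.com`;
}

// Placeholder values, chosen only for the example.
const deploymentName = "analytics";
const inputs: WorkspaceInputs = {
    accountId: "00000000-0000-0000-0000-000000000000",
    workspaceName: "analytics-workspace",
    awsRegion: "us-east-1",
    deploymentName,
    customTags: { team: "data" },
};

const url = expectedWorkspaceUrl("acme", deploymentName);
console.log(`${inputs.workspaceName} -> ${url}`);
```

In a real program the actual URL is reported by the workspaceUrl output once the workspace exists, so a helper like this is mainly useful for sanity-checking naming conventions before deployment.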

- account_id str - Account ID, which can be found in the top-right corner of the Accounts Console.

- workspace_name str - name of the workspace, as it will appear in the UI.

- aws_region str - region of VPC.

- cloud str

- cloud_resource_container MwsWorkspacesCloudResourceContainerArgs - A block that specifies GCP workspace configurations, consisting of the following blocks:

- creation_time int - (Integer) time when workspace was created

- credentials_id str

- custom_tags Mapping[str, Any] - The custom tags key-value pairing that is attached to this workspace. These tags will be applied to clusters automatically in addition to any default_tags or custom_tags on a cluster level. Please note it can take up to an hour for custom_tags to be set due to scheduling on Control Plane. After custom tags are applied, they can be modified, but they can never be completely removed.

- customer_managed_key_id str

- deployment_name str - part of URL as in https://<prefix>-<deployment-name>.cloud.databricks.com. Deployment name cannot be used until a deployment name prefix is defined. Please contact your Databricks representative. Once a new deployment prefix is added or updated, it will only affect workspaces created afterward.

- external_customer_info MwsWorkspacesExternalCustomerInfoArgs

- gcp_managed_network_config MwsWorkspacesGcpManagedNetworkConfigArgs

- gke_